Ethical AI: Addressing Bias and Ensuring Fairness in Machine Learning Models

As artificial intelligence (AI) becomes increasingly integrated into areas of our lives such as healthcare, finance, criminal justice, and hiring, the ethical implications of these technologies have come to the forefront. One of the most critical ethical concerns in AI is bias in machine learning (ML) models, which can lead to unfair or discriminatory outcomes. These biases often arise from the data used to train AI systems or from the way the models are developed, and they can have profound real-world consequences, perpetuating societal inequalities.

Ethical AI—AI that is fair, transparent, and responsible—is essential for ensuring that machine learning models benefit society as a whole, rather than reinforcing existing biases. In this article, we will explore how bias emerges in machine learning, the consequences of biased models, and strategies for ensuring fairness and accountability in AI systems.

1. Understanding Bias in Machine Learning

Bias in machine learning occurs when a model produces results that are systematically prejudiced due to flawed assumptions, data, or algorithms. Bias can manifest in various ways, including through skewed data, unrepresentative training datasets, or biased decision-making processes. In ML, these biases can affect predictions and decisions, such as whether a loan is approved, which job applicants are selected, or how law enforcement agencies allocate resources.

- Data Bias: Machine learning models are only as good as the data they are trained on. If the training data contains biases—such as historical inequalities or underrepresentation of certain groups—the model will likely reflect and perpetuate those biases. For example, if a facial recognition system is trained predominantly on images of white faces, it may perform poorly on individuals with darker skin tones, leading to biased and inaccurate results.

- Sampling Bias: This occurs when certain groups or categories are overrepresented or underrepresented in the training dataset. For example, an AI system trained on data from predominantly urban areas might struggle to make accurate predictions in rural settings, leading to unfair or inaccurate outcomes for people in those areas.

- Algorithmic Bias: Even if the data itself is balanced, the way a model is built can introduce bias. The choice of objective, features, or algorithm may lead a model to prioritize specific features or to rely on variables that act as proxies for sensitive attributes like race, gender, or socioeconomic status. For example, a postal code can correlate strongly with race even when race is never given to the model.
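Data and sampling bias can often be spotted before any model is trained by comparing how often each group appears in the training set with how often it appears in the population the model will serve. The sketch below assumes one group label per training example and a set of reference shares (for example, from census figures); the function name `representation_gaps` and the urban/rural numbers are hypothetical, chosen only to illustrate the check.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Compare each group's share of the training data with a reference share.

    groups: iterable of group labels, one per training example.
    reference_shares: dict mapping group label -> expected share (hypothetical
        source, e.g. census data for the population the model will serve).
    Returns a dict mapping group -> observed share minus reference share.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    return {g: counts.get(g, 0) / total - ref for g, ref in reference_shares.items()}


# Hypothetical example: a dataset collected mostly in urban areas.
training_regions = ["urban"] * 90 + ["rural"] * 10
gaps = representation_gaps(training_regions, {"urban": 0.8, "rural": 0.2})
print({g: round(v, 3) for g, v in gaps.items()})  # {'urban': 0.1, 'rural': -0.1}
```

A clearly negative gap, as for the rural group here, signals that the model may underperform for that group. This check does not catch proxy-based algorithmic bias, which requires examining the model's behavior as well.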

2. The Consequences of Bias in AI Models

The consequences of bias in AI models can be far-reaching, with potential harm to individuals and society. AI systems, when biased, may perpetuate existing inequalities and disproportionately impact marginalized or vulnerable groups. Some of the most notable consequences include:

- Discrimination in Hiring and Employment: Biased algorithms used in recruitment or performance evaluation systems can unfairly disadvantage certain groups based on gender, ethnicity, or age. For instance, a hiring algorithm trained on historical data in which most successful applicants were men may learn to favor male candidates, disadvantaging equally qualified female or non-binary candidates.

- Financial Inequality: AI-driven credit scoring models and loan approval systems may perpetuate existing biases in the financial sector. If a machine learning model is trained on biased historical financial data, it may unfairly deny loans to individuals from certain racial or socioeconomic backgrounds, reinforcing economic disparities.

- Unfair Legal and Criminal Justice Outcomes: Machine learning models are increasingly being used in the criminal justice system, such as risk assessment tools that predict the likelihood of a defendant reoffending. If these models are trained on biased historical data, they may unfairly target certain racial or ethnic groups, leading to inequitable sentencing and parole decisions.

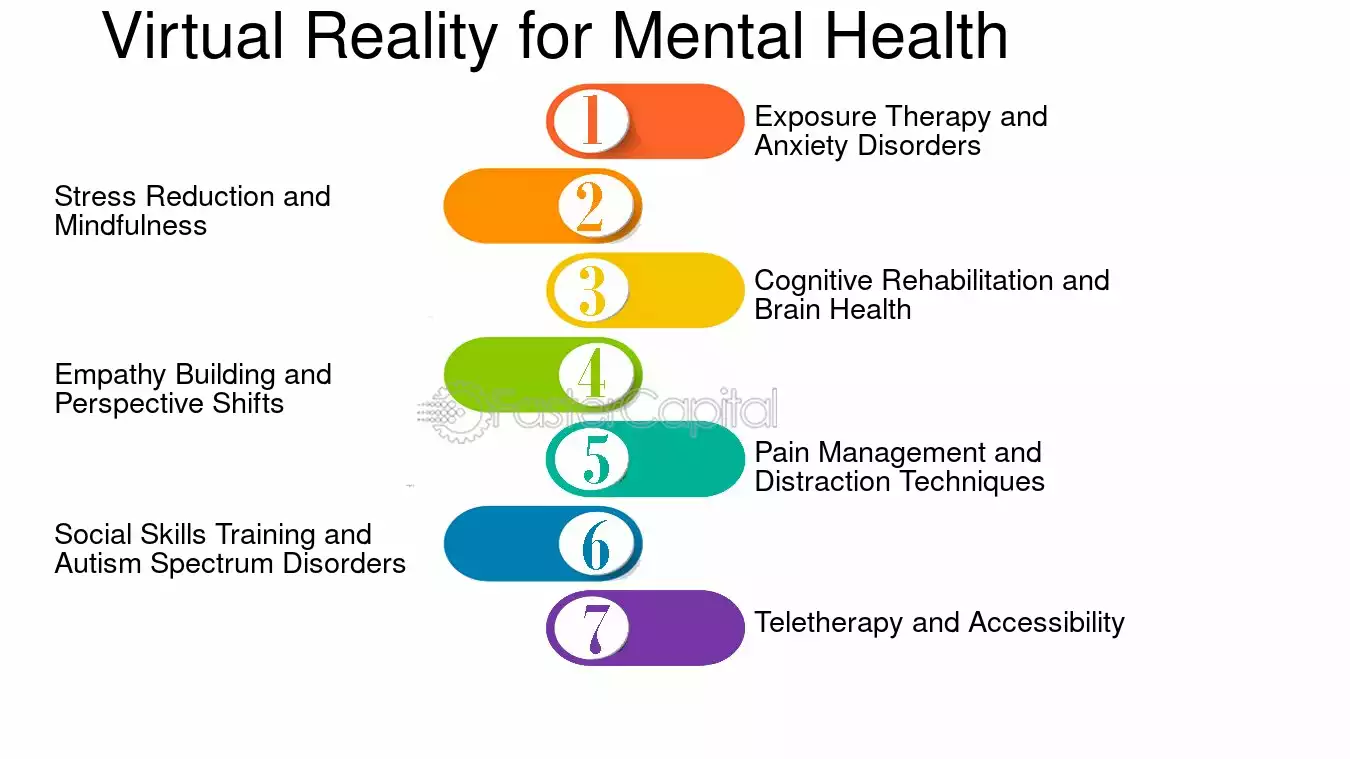

- Health Disparities: In healthcare, biased AI models can lead to misdiagnoses or unequal treatment recommendations. For instance, if an AI system is trained on healthcare data that predominantly represents one demographic, such as middle-aged white men, it might miss crucial health risks in other populations, leading to less effective care for women, people of color, or other underserved groups.

3. Ensuring Fairness in AI

The first step toward ethical AI is ensuring fairness—designing and implementing machine learning models that do not disproportionately disadvantage any group or individual. Achieving fairness in AI is complex and requires addressing several key considerations:

- Data Representation and Diversity: To ensure fairness, it is critical that the data used to train machine learning models is diverse and representative of all relevant groups. This means collecting data from a variety of sources and ensuring that underrepresented groups are adequately included. For instance, in healthcare, ensuring that AI models are trained on data from diverse patient populations can help prevent healthcare disparities.

- Bias Detection and Auditing: Regularly auditing machine learning models for potential biases is crucial for maintaining fairness. Organizations can use open-source fairness toolkits, such as Fairlearn or IBM’s AI Fairness 360, to compare a model’s predictions and error rates across demographic groups. If discrepancies are found, corrective measures can be taken, such as retraining the model or adjusting its features to reduce bias.

- Fairness Metrics: There is no one-size-fits-all definition of fairness, so it is important to adopt fairness metrics that align with the desired outcomes. Commonly used metrics include demographic parity (every group receives positive predictions, such as loan approvals, at the same rate) and equal opportunity (among people whose true outcome is positive, every group is correctly identified at the same rate, i.e., equal true positive rates). These criteria can conflict with one another, so choosing among them is a deliberate design decision that should guide the development and deployment of machine learning models.

- Transparency and Explainability: To build trust and ensure accountability, AI systems must be transparent. This means making AI models interpretable and explainable so that stakeholders—whether customers, employees, or regulatory bodies—can understand how decisions are being made. Explainability can also help uncover any biases in the system. For instance, if a hiring algorithm rejects certain candidates, it should be able to explain why, allowing human reviewers to assess whether the decision was fair.
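As a rough illustration of an audit using demographic parity and equal opportunity, the following sketch computes each group's selection rate and true positive rate from a model's binary predictions, then reports the largest gap between groups for each metric. It is a plain-Python sketch that assumes labels and predictions are 0/1 and that every group has at least one truly positive example; the function name `fairness_report` and the toy data are hypothetical. Toolkits such as Fairlearn provide tested implementations of these metrics.

```python
def _mean(values):
    # Fraction of 1s in a list of 0/1 values.
    if not values:
        raise ValueError("cannot compute a rate over an empty set of examples")
    return sum(values) / len(values)


def fairness_report(y_true, y_pred, groups):
    """Return per-group rates plus demographic parity and equal opportunity gaps.

    y_true, y_pred: sequences of 0/1 ground-truth labels and model predictions.
    groups: sequence of group labels aligned with y_true and y_pred.
    """
    per_group = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        # Selection rate: share of the group that receives a positive prediction.
        selection_rate = _mean([y_pred[i] for i in idx])
        # True positive rate: share of truly positive members predicted positive.
        tpr = _mean([y_pred[i] for i in idx if y_true[i] == 1])
        per_group[g] = {"selection_rate": selection_rate, "tpr": tpr}

    selection_rates = [r["selection_rate"] for r in per_group.values()]
    tprs = [r["tpr"] for r in per_group.values()]
    return {
        "per_group": per_group,
        # 0.0 means perfect demographic parity across groups.
        "demographic_parity_gap": max(selection_rates) - min(selection_rates),
        # 0.0 means perfect equal opportunity across groups.
        "equal_opportunity_gap": max(tprs) - min(tprs),
    }


# Hypothetical toy data: two groups of four applicants each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = fairness_report(y_true, y_pred, groups)
print(report["demographic_parity_gap"])  # 0.5
print(report["equal_opportunity_gap"])   # 0.5
```

Here group B is selected at a third of group A's rate (0.25 versus 0.75), and only half of its truly positive members are recognized, so both gaps would flag the model for review. Which gap matters more depends on the fairness definition the team has chosen.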

4. Addressing Bias with Techniques and Tools

Several machine learning techniques and tools can be implemented to mitigate bias and enhance fairness in AI models:

- Bias Mitigation Algorithms: Various techniques can be applied before or during training to minimize bias. Reweighing adjusts the weight of each training example so that group membership and outcome labels are balanced. Adversarial debiasing trains the main model alongside an adversary that tries to predict the sensitive attribute from the model’s outputs, penalizing the main model whenever the adversary succeeds. Fair representations learn a transformation of the data that obscures sensitive information while preserving its predictive power.

- Fairness Constraints: During the model-building process, fairness constraints can be applied to ensure that the model’s predictions do not disproportionately harm any particular group. Examples include requiring equal error rates across demographic groups or ensuring that decisions are not influenced by protected attributes such as race, gender, or age, either directly or through proxy variables correlated with them.

- Inclusive Design and Development Teams: One key strategy to reduce bias is to ensure that diverse perspectives are involved in designing and developing machine learning models. Teams that are representative of different genders, races, and backgrounds are more likely to identify potential biases in AI systems and implement strategies to mitigate them.
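One widely used form of reweighing (the preprocessing method of Kamiran and Calders) gives each training example the weight P(A=a) · P(Y=y) / P(A=a, Y=y), where A is the sensitive attribute and Y the label. Group and label combinations that are rarer than independence would predict are weighted up, and overrepresented ones are weighted down. The sketch below estimates these weights from counts; the function names and toy data are hypothetical, and the resulting list is meant to be passed to a learner that accepts per-example weights, such as the `sample_weight` argument accepted by the `fit` method of many scikit-learn estimators.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(A=a) * P(Y=y) / P(A=a, Y=y), estimated from counts.

    With these weights, group membership and label are statistically
    independent in the weighted training data.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # (c_a / n) * (c_y / n) / (c_ay / n) simplifies to c_a * c_y / (n * c_ay).
    return [
        group_counts[a] * label_counts[y] / (n * joint_counts[(a, y)])
        for a, y in zip(groups, labels)
    ]


# Hypothetical data: group A has mostly positive labels, group B mostly negative.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)


def weighted_positive_rate(group):
    # Share of the group's total weight carried by positive examples.
    pos = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    total = sum(w for w, g in zip(weights, groups) if g == group)
    return pos / total


print(round(weighted_positive_rate("A"), 3), round(weighted_positive_rate("B"), 3))  # 0.5 0.5
```

Unweighted, group A has a positive rate of 0.75 and group B 0.25; after reweighing, both groups carry the same positive rate, so a model trained on the weighted data no longer sees the historical imbalance as a signal. The data themselves are unchanged, which is why this approach is often tried before more invasive methods.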

5. Ethical Guidelines and Governance in AI

Developing and adhering to ethical guidelines is essential for ensuring that AI systems are fair and responsible. Ethical AI frameworks provide organizations with principles to follow and help ensure that AI is used in ways that benefit all individuals. Some important ethical considerations include:

- Accountability: Clear lines of accountability must be established for the outcomes of AI systems. Organizations must take responsibility for the decisions made by their AI models and be prepared to rectify any harms caused by biased systems.

- Continuous Monitoring and Improvement: Ethical AI is an ongoing process. Businesses must commit to continuously monitoring AI models for bias, updating them regularly, and adapting them to changing societal norms and regulations. This ongoing effort helps ensure that AI systems remain fair and trustworthy over time.

- Legal and Regulatory Compliance: Data-protection laws such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) already constrain how personal data is used in AI systems, and the GDPR gives individuals rights concerning decisions based solely on automated processing (Article 22). Adhering to these regulations is essential for businesses to remain compliant and avoid legal repercussions.
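Continuous monitoring can be as simple as recomputing a fairness metric on each new batch of production predictions and raising an alert when it drifts past an agreed threshold. The sketch below tracks the selection-rate gap between groups; the 0.1 threshold, the batch format, and the function names are hypothetical choices for illustration. A real deployment would likely also track error-rate metrics, which require ground-truth outcomes that often arrive later.

```python
def selection_rate_gap(preds, groups):
    # Largest difference in positive-prediction rate between any two groups.
    rates = []
    for g in set(groups):
        group_preds = [p for p, grp in zip(preds, groups) if grp == g]
        rates.append(sum(group_preds) / len(group_preds))
    return max(rates) - min(rates)


def monitor(batches, threshold=0.1):
    """Yield (batch_index, gap, alert) for each (predictions, groups) batch."""
    for i, (preds, groups) in enumerate(batches):
        gap = selection_rate_gap(preds, groups)
        yield i, gap, gap > threshold


# Hypothetical production batches: the second shows a widening gap.
batches = [
    ([1, 0, 1, 0], ["A", "A", "B", "B"]),
    ([1, 1, 1, 0], ["A", "A", "B", "B"]),
]
for index, gap, alert in monitor(batches):
    print(index, gap, "ALERT" if alert else "ok")
```

The first batch prints `0 0.0 ok` and the second `1 0.5 ALERT`. An alert is a prompt for human review rather than proof of unfairness, since small batches can produce large gaps by chance.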

6. The Future of Ethical AI

As AI technologies continue to evolve, the need for ethical considerations will only become more pressing. Ensuring fairness and addressing bias in machine learning models is not just a technical challenge but also a societal one. The future of AI must be one where technological advancements are aligned with the broader goals of justice, equality, and human well-being.

- AI Regulation and Policy: Governments, organizations, and industry bodies are beginning to take steps toward regulating AI. Global efforts to create standards for ethical AI, such as the OECD’s Principles on Artificial Intelligence and the EU’s AI Act, will play a crucial role in shaping the responsible development and deployment of AI technologies worldwide.

- Public Awareness and Engagement: Public understanding of AI’s ethical implications is crucial to ensuring that these technologies are developed responsibly. Engaging with diverse communities, raising awareness about AI’s potential risks, and fostering a collaborative approach to ethical AI development will be key to achieving widespread fairness in machine learning models.

Conclusion: Moving Toward Fair and Responsible AI

As artificial intelligence continues to revolutionize industries and transform society, addressing bias and ensuring fairness in machine learning models is more important than ever. By prioritizing ethical considerations, adopting fairness-aware algorithms, and establishing robust governance frameworks, businesses and policymakers can create AI systems that are not only powerful but also equitable and inclusive. The journey toward ethical AI is ongoing, but through collaboration, transparency, and accountability, we can harness the power of AI to create a more just and fair world for everyone.
